The Matrix: Virtual Reality and Bimanual Haptic Robotics

Video Showcasing the Matrix

The Matrix is a one-of-a-kind, custom-built virtual reality and haptic robotic simulation system. It was built as part of our research with the RAIN (Robotics and AI in Nuclear) Hub and forms the centre of the lab's ongoing research on virtual reality, haptic robotics and human sensory perception. It showcases what can be achieved by interdisciplinary collaboration across academia and industry.

The Overall Challenge

In many instances humans need to interact efficiently with 3D+ data, i.e. data with three or more dimensions. Examples include remote robotic telepresence, delineation of cancerous tumours in medical imaging data, exploration of 3D models in architecture and construction, surgical training and, more broadly, data science in general. However, our ability to generate this type of data has dramatically outpaced our ability to interact naturally and efficiently with it.

This is abundantly clear within seconds of using any of today’s commercially available virtual reality devices, or when watching operators use state-of-the-art industrial robotic telepresence systems. In both cases, movement, touch, interaction and control are limited and rudimentary at best. This dramatically curtails the promise of such technologies.

For example, even the simple act of reaching to grasp and pick up an object, something a child can do effortlessly, can involve holding a handheld controller, reaching to an object, pressing a button on the controller to “hold” the object, moving the controller, then releasing the button to “release” the object in a different location. All the while, the user is interacting with thin air, as the objects have no weight or material properties.

In the commercial setting this limits user enjoyment and uptake of such systems; in the industrial setting it additionally results in slow, laborious and high-cost operations for even simple tasks.

The video below of snooker player Ronnie O'Sullivan nicely demonstrates the importance of an intuitive means of touch, interaction and control with 3D+ data.

Snooker player Ronnie O'Sullivan having a go at virtual reality

The Matrix

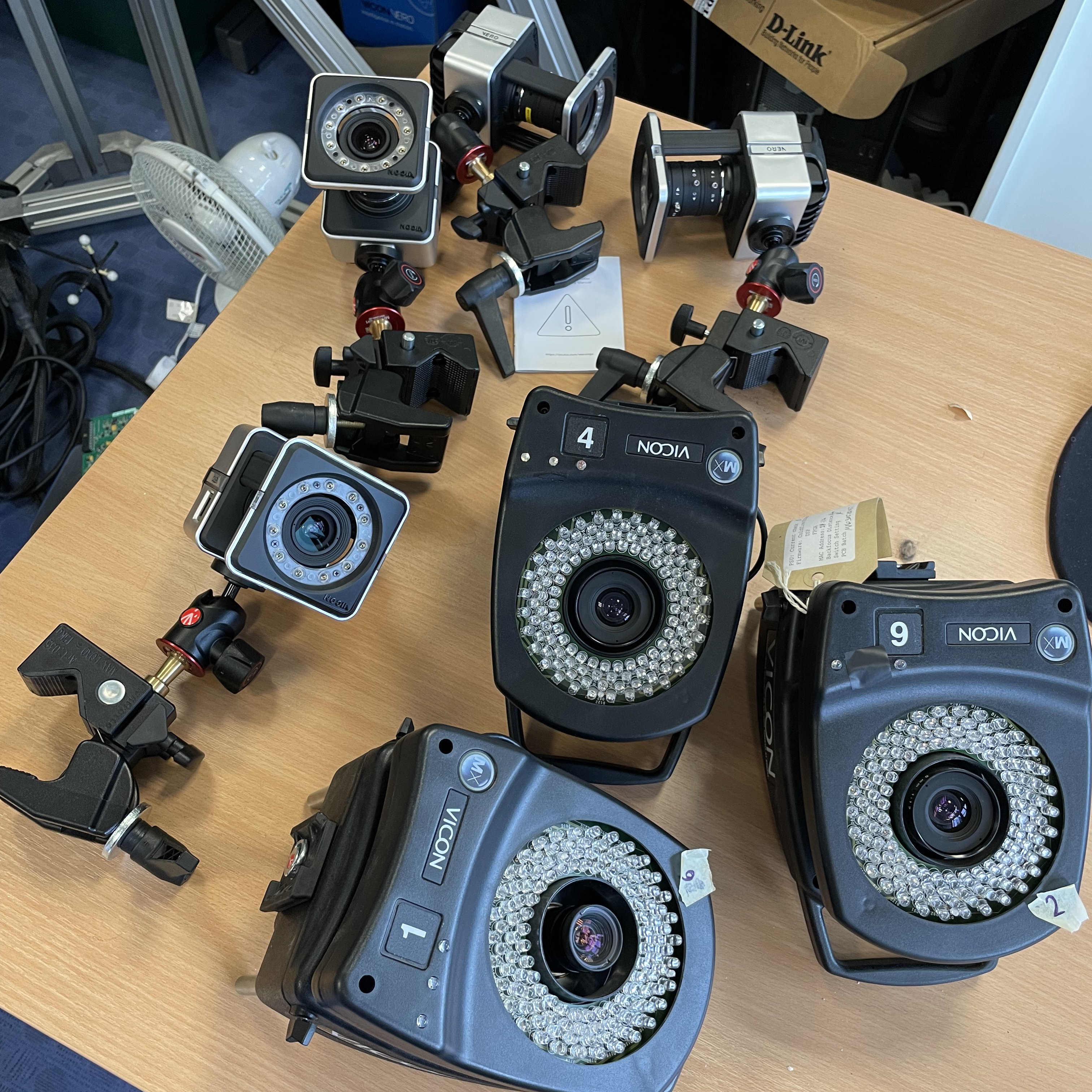

To address this broad challenge, our lab has developed a virtual reality and haptic robotic system that we call "The Matrix". The Matrix comprises four custom-built 3DOF haptic robotic force-feedback devices, six Vicon Vero 2.2 motion tracking cameras, and an Oculus Quest 2 virtual reality head-mounted display. The visual environment is simulated in Unreal Engine, while the haptic environment and physics are simulated through TOIA, a middleware plugin for Unreal Engine developed by Generic Robotics. TOIA allows high-fidelity haptic simulation, including soft-body mechanics.

The Matrix was built as part of our funded research with the RAIN Hub. For this, our focus was on simulating nuclear teleoperation procedures, such as "pick up and post" and "sort and segregate", within nuclear gloveboxes. With the RAIN Hub extension, we diversified our use cases to include medical haptics. This integrated work already under way in the lab, in collaboration with Dr Alan McWilliam's group at the University of Manchester / Christie Hospital Manchester, on how providing VR and/or haptics could help improve the detection and delineation of tumours in medical imaging data.

A user in the Matrix

Soft-body mechanics in TOIA

Our Approach

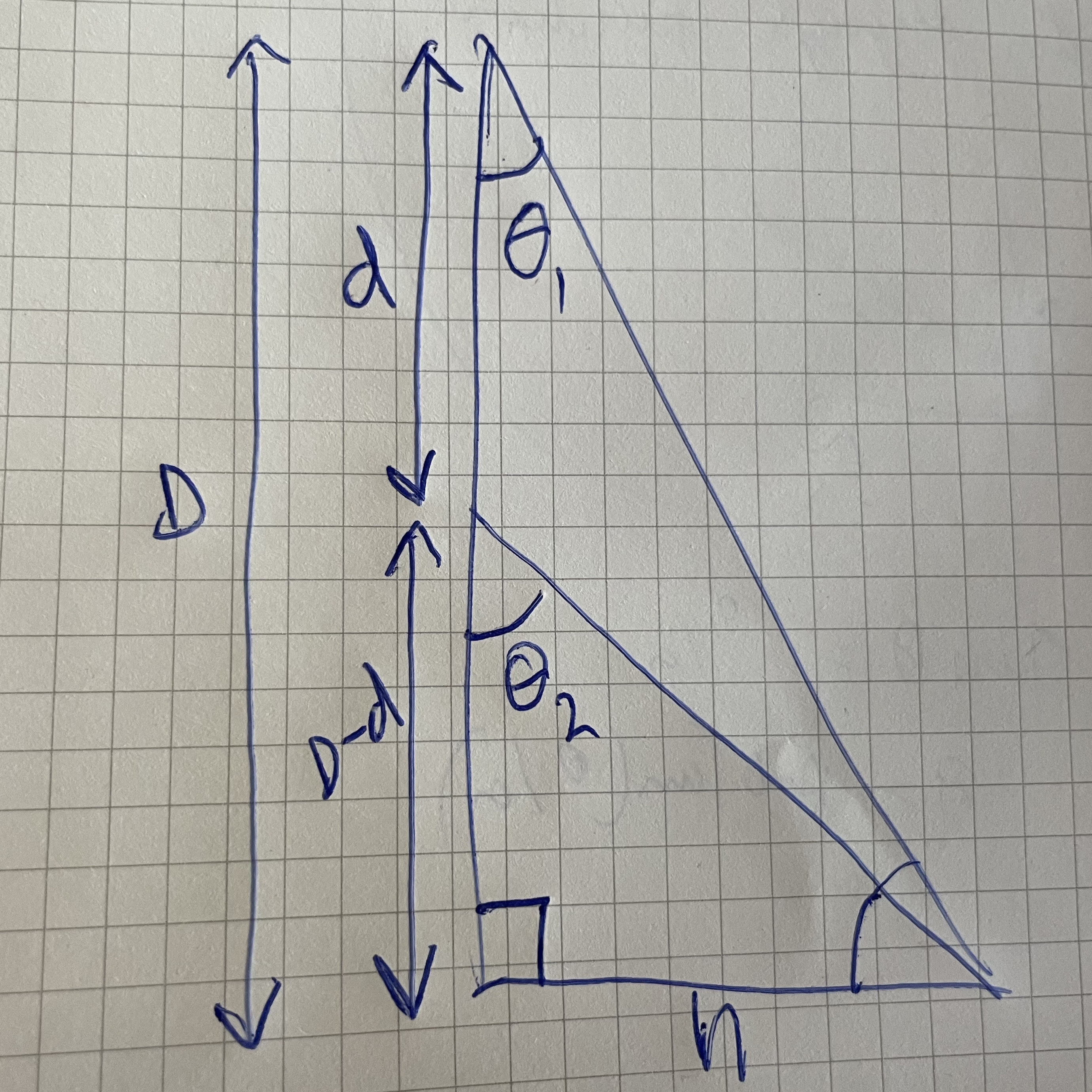

The fundamental principle guiding our research is that, with a human user in the control loop, it is critical to understand how that user's sensory systems process incoming information for the real-time adaptive control of behaviour. Without this understanding, it is impossible to design effortless and intuitive interfaces through which a user interacts with 3D+ data, whether in a consumer or an industrial setting. This approach is summarised well in a talk titled "Understanding sensorimotor optimisation of bimanual telerobotics", given by the head of the lab, Dr. Peter Scarfe, at one of the Human Robot Interaction (HRI) seminars run as part of the work of the RAIN Hub. A link to the session including the talk can be found here.

The Matrix Team

Associate Prof. Peter Scarfe: Lead of the Vision and Haptics Laboratory, School of Psychology, University of Reading.

Dr. Alastair Barrow: CEO of Generic Robotics, honorary member of the Vision and Haptics Lab and research collaborator.

Prof. William Harwin: Professor of Interactive and Human Systems, Biomedical Engineering, University of Reading.

Rea Gill: PhD student, 3+1 PhD studentship funded by SeNSS and EUROfusion.

Ali Altun: PhD student, funded by the Turkish Ministry of Education.

Jake Tomaszewski: Research collaborator, currently doing an MSc at Imperial College London.